Overhyped AI in Biotech

TL;DR: AI is not being effectively utilized by biotech startups; analyzing GWAS using logistic regression or MLPs is not AI, and questionable companies are riding the wave to inflate their valuations. When these hit the public markets, and eventually some fail trials, a lot of value will have been lost.

The Setup

I'm a current Harvard student studying neuroscience and have done computational biology research for the last 8 years. I read a textbook on healthcare finance and have been investing in biotech since.

My view of AI in bio has drastically changed over the last two years. I am not one of those AI denialists or doubters; in fact, I'm quite the opposite.

In middle school, I was learning natural language processing (NLP), which later had me introduced to BERT and similar models. For those not fluent, BERT was a language model by Google in 2018 that used encoder-only transformers for unmasking and next sentence prediction. In other words, it was a major leap on the road that led us to the GPT models.

I remember playing around with GPT-2 on Hugging Face and being absolutely blown away. This was back in 2019 when no one was talking about "generative AI" (I don't like this term) in the public domain, so no one could tell if I was ever using GPT-2 to assist with my work. This led me to early days playing with AI Dungeon, and eventually, GPT-3 (IYKYK - completions API was so powerful).

Seeing this dramatic increase in capability, along with powerful breakthroughs in reinforcement learning and CoT has led me to be very bullish on scale. There will be multiple ways to scale to a superhuman-level reasoner. As of the time of writing this blogpost, Grok 4 has not been released, but leaks claim it scores 35-45% on Humanity's Last Exam (HLE). As a contributor to the HLE eval, this is remarkable to me, and quite frankly scary.

If the Grok leaks are true, it means that getting 200,000 H100s lets you create a superhuman-level reasoner across a multitude of domains. Why superhuman? HLE's questions are literally unanswerable by the normal human (AGI). If you have PhD-level knowledge in a domain, you can answer a few questions, maybe with the help of Google & research.

In addition to these notable circumstances in large language model development, I have done bio ML research previously. I previously designed codon optimization algorithms for E. coli and CHO that could improve expression levels using recurrent neural networks and transformers. These beat out state-of-the-art techniques made by industry players. I closely monitored Isomorphic Labs' progress, and the amazing work by Arc, Deepmind, EvoScale team, etc.

And yet, I am deeply pessimistic about the current state of "AI in biotech." The field is caught in a hype cycle so profound that it threatens to poison the well for years to come. This is incredibly dangerous given the trough that biotech is currently in, with poor multipliers and lack of funding.

Quick stop at Google and Meta

It’s tempting to point to breakthroughs like AlphaFold as signs that AI has solved core problems in biotech. AF2 and 3 are generational leaps in protein structure prediction, and AlphaFold-Multimer extends this to modeling complexes.

But, neither was built to predict binding affinity, off-target effects, or immunogenicity.

That’s where models like ESM come in--Facebook/Meta’s Evolutionary Scale Modeling effort (which has been spun out) has demonstrated that transformer-based models trained on millions of sequences can learn embeddings predictive of functional properties, including binding.

Thus, what we have today are preclinical triage tools. These are highly useful for narrowing candidate pools, but not capable of replacing downstream assays, in vivo validation, or true systems biology modeling.

The hard bottlenecks like target selection, toxicity, ADMET profiling etc. remain elusive. Some of these might be hidden behind the comp. chem teams' curtain at large biopharmas where the best data still lives. Hence why I'm bullish on larger AI/data giants' ability to get access to (long $GOOGL) e.g. Isomorphic Labs theoretically has the capital and network to gain access to proprietary clinical and preclinical data from Lilly & Novartis etc.

What is the current "AI in biotech" hype though?

The fundamental problem is one of definition. When a biotech company claims to be "AI-driven," what does it actually mean? In the vast majority of cases, it means applying decades-old statistical methods and calling it frontier technology.

Take InSilico Medicine.

InSilico is private, and valued at well over $1B+ with an IPO coming in Hong Kong. The valuation is not a Theranos, but misguided. I believe the firm is actually worth closer to $700M based on their current pipeline. Let me explain.

InSilico Medicine is piloting their TNIK inhibitor drug, one of their most promising candidates. They published a Nature Biotech article about their process to discover this, and claim it is heavily influenced by AI. Their abstract mentions their use of AI three times, and paints a picture that AI had a huge impact on the creation of their drug.

Yet, the AI-generated target TNIK they found was subjected to traditional drug development: kinase assays, molecular docking, lead optimization, and animal studies. AI did not help the firm predict any structure-activity relationships, off-target effects, or toxicity. Now this is the norm.

But even on the generative chemistry side: the process wasn’t “generative” in the sense of GPT or LLM-like novelty. It was scaffold-hopping with AI helping tweak substituents. This has been done by medicinal chemists for decades and doesn't remain a bottleneck. Calling this AI-designed is a branding play.

On the target selection, they claim AI-derived ranking of potential fibrosis targets. What companies like Insilico call “AI” here is, in many cases, just old-school statistics with a modern rebrand. In this paper, the so-called AI pipeline consists of heterogeneous graph walks, text trend mining, and causal inference over omics data. Let’s be honest: this is not artificial intelligence in any meaningful sense. Heterogeneous graph walks are essentially like a PageRank algorithm for protein networks; text mining is glorified keyword frequency analysis across PubMed; and “causal inference” here boils down to linear regressions embedded in pathway enrichment pipelines. There’s no emergent intelligence from training on billions of sequences from evolution.

Claiming the process took “only 18 months,” and heavily implying AI played a role is misleading. The time savings are likely from organizational optimization, and the fact that preclinical seems to have been conducted in China, rather than true AI acceleration. AI didn't remove the bottlenecks.

Towards the latter end of their analysis, they incorporate GWAS and multi-omics data using what they label as “AI techniques.” In practice, this likely involves network-based scoring methods, causal inference frameworks, and dimensionality reduction. These are valuable and well-established methods, but then pitching the company as a generative AI player is a categorical error. It’s like calling a calculator a “supercomputer”: technically accurate in the broadest sense, but completely misleading in spirit. What’s missing is any semblance of reasoning, generalization, or emergent capability like you see in the large ESM models.

The pitch is seductive: Our proprietary AI engine will slash drug discovery timelines, cut R&D costs, and increase the probability of success. The reality is that the "AI" component often plays a minor, preliminary role. The heavy lifting, the slow, expensive, and failure-prone work of wet lab validation, pre-clinical animal studies, and human clinical trials, remains largely untouched. The AI isn't driving the science; it's driving the fundraising.

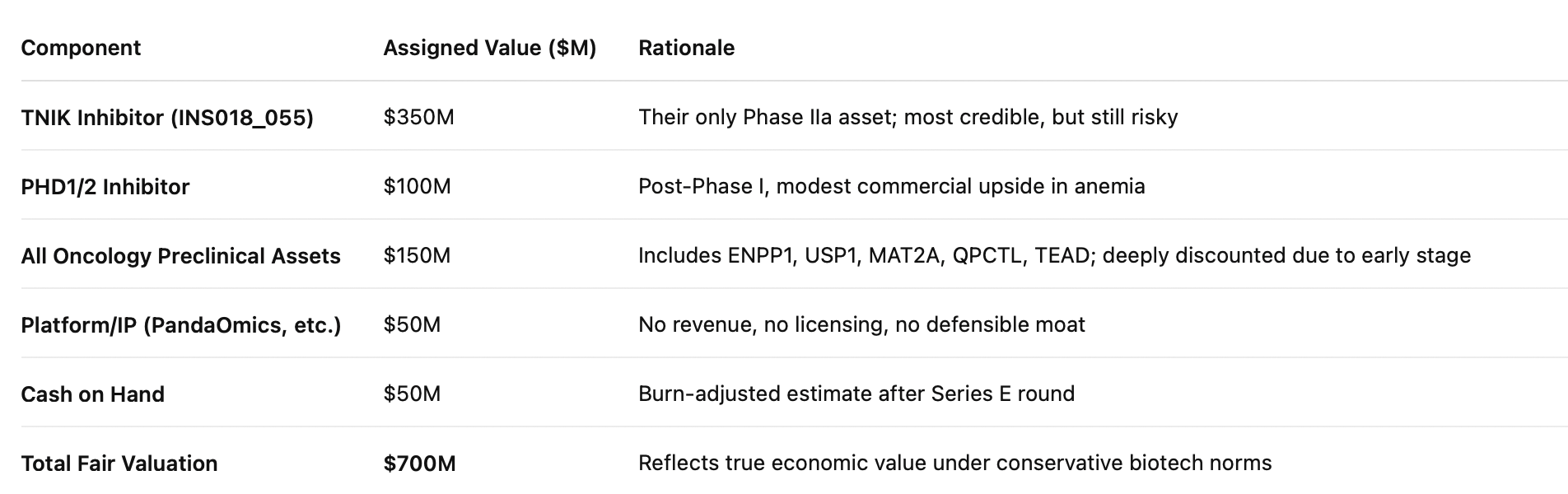

I'm not a life sciences banker, so here's a quick excerpt from ChatGPT performing an analysis and valuation of their candidates:

So what's real, then?

The true promise of AI in biology is to model the complexity of the cell. It's clear to me that this is the future of AI in bio, and hence why leaders like Arc Institute are focusing efforts on creating the "virtual cell" which will be a holy grail.

Groups like the Chatterjee Lab at University of Pennsylvania are working on what is a first step - de novo generating peptides, and then using tools like AlphaFold and ESM to evaluate binding characteristics to help screen sequences to give you a list of promising sequences.

But, even a promising sequence must be synthesized, expressed in a cellular system, purified, and then rigorously tested in vitro for binding and function. If it passes, it moves to in vivo testing in cells, then animals, to assess its safety and efficacy. Each of these steps is an enormous filter where the vast majority of candidates fail.

This is not to say that the research is meaningless, however. In fact, the work coming out of the Chatterjee Lab represents some of the most rigorous and technically impressive applications of modern AI to peptide therapeutics. Their recent pipeline, PepTune, uses a discrete diffusion model guided by Monte Carlo Tree Search to design peptides optimized across multiple therapeutic properties - an actual multi-objective optimization engine, not just a buzzword.

PepPrCLIP goes a step further, combining ESM latent perturbations with a CLIP-style contrastive model to generate peptide binders against conformationally diverse, even unstructured targets, using nothing but sequence data. And PepDoRA provides a cross-modal embedding space unifying peptides and small molecules, enabling better downstream property prediction across previously siloed domains. This isn’t repackaged logistic regression.

These are principled architectures designed to generalize, scaffold, and accelerate truly novel bioengineering. These guys are taking the full advantage of the large datasets and large models.

But here is the inevitable trajectory: overhyped, AI-branded biotech companies will continue to go public at staggering valuations. Their assets, born from so-called "AI platforms," will enter the unforgiving gauntlet of clinical trials. And many of them will fail. They will fail not because the AI was faulty, but because the AI right now doesn't fundamentally solve the bottlenecks in the process. When these high-profile failures hit, the narrative will flip.

Public market investors will realize they weren't buying into a technology revolution. Stocks will plummet, and billions of dollars in shareholder value will be incinerated. The danger is that this fallout won't just punish the guilty. It might create a deeper biotech AI winter, where investors become even more skeptical of all companies in the space.

Disclaimer: I am a student who is learning, and these are my opinions. All comments & feedback are welcome.

No spam, no sharing to third party. Only you and me.

Member discussion